This page presents a number of charts relating to Nvidia's DLSS 4 suite of technologies. I do recommend watching the video, as image quality analysis is important when considering the DLSS 4 numbers, but I'll explain each chart here.

New Transformer DLSS model

The first thing to look at is the introduction of a new Transformer-based DLSS model. Nvidia has suggested that it pushed the old Convolutional Neural Network (CNN) upscaler as far as it could go in terms of image quality. The new, more complex Transformer model instead uses ‘2x more parameters and 4x more compute to provide greater stability, reduced ghosting, higher details and enhanced anti-aliasing in game scenes’ (Source).

The good news here is that this actually applies to all RTX GPUs! That's right, the Transformer model is compatible with cards back to the 20-series, and it works with both Super Resolution and Ray Reconstruction.

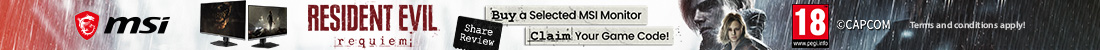

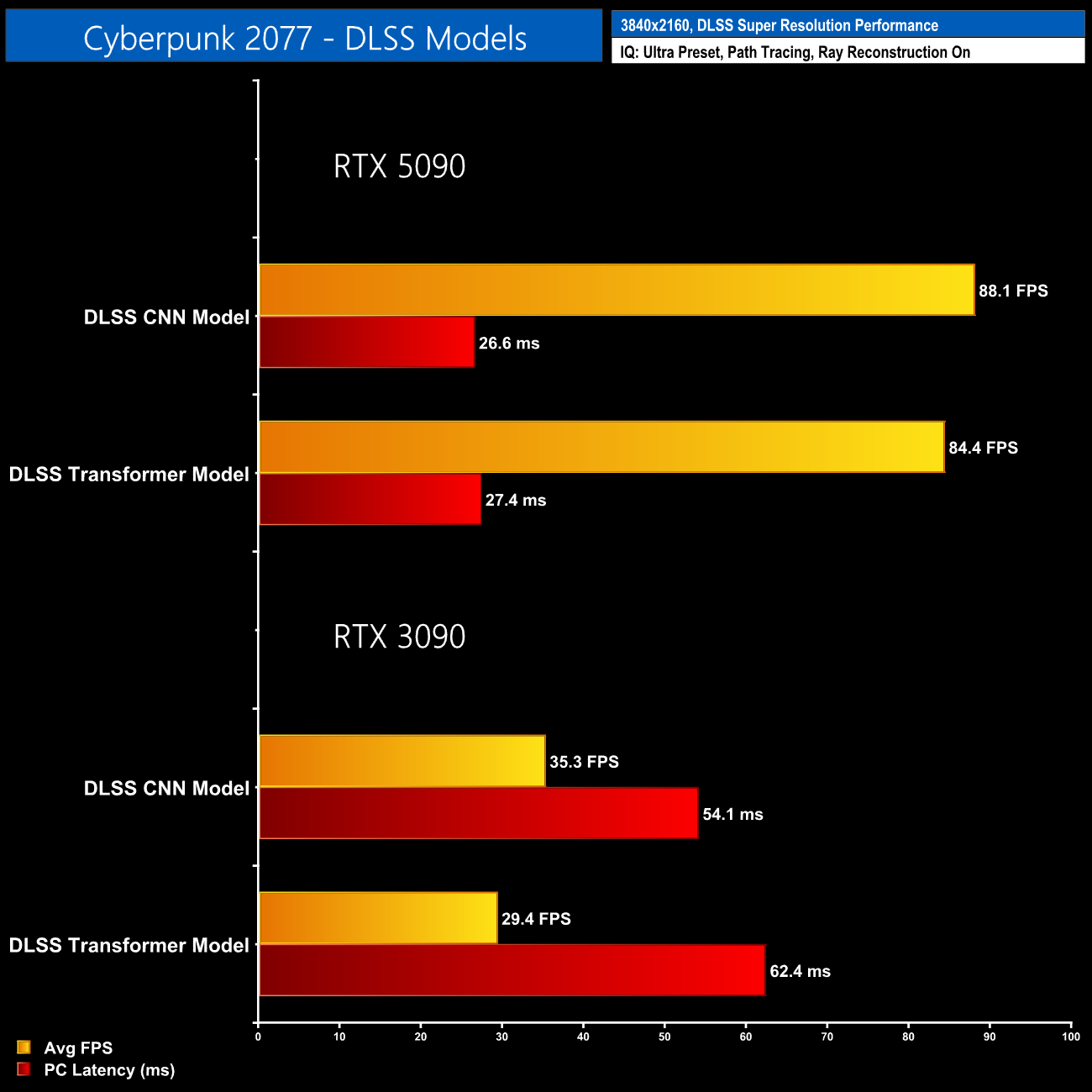

In the video I do spot visual benefits compared to the older model, but it's also worth making it clear there is a slight performance cost compared to the CNN model. In Cyberpunk 2077 – Ultra settings, path tracing on, 4K with Super Resolution Performance and Ray Reconstruction enabled – I saw a 4fps reduction on the 5090. However, the cost is greater on older GPUs, with the RTX 3090 dropping from 35fps to 29fps.

Putting it another way, the cost for a 5090 to use the Transformer model is just 4% of your fps, whereas for a 3090 it increases to a 17% cost over the CNN model. That may mean it isn't always viable for those on older GPUs, but it's great to see improvement being made for all RTX owners.
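The percentage is simply the fps lost divided by the frame rate on the CNN model. As a quick check of the RTX 3090 figure, here is a minimal Python sketch. The function name `relative_cost` is just an illustrative choice, and the only inputs are the 35fps and 29fps results quoted above.

```python
def relative_cost(cnn_fps: float, transformer_fps: float) -> float:
    """Return the share of frame rate lost when switching models (0.0 to 1.0)."""
    return (cnn_fps - transformer_fps) / cnn_fps


# RTX 3090 in Cyberpunk 2077: 35fps (CNN) down to 29fps (Transformer).
rtx_3090_cost = relative_cost(35, 29)
print(f"RTX 3090 cost: {rtx_3090_cost:.0%}")  # prints "RTX 3090 cost: 17%"
```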

DLSS Multi Frame Generation

Multi Frame Generation (MFG), however, is what's new and currently exclusive to the 50-series. As we know, Frame Gen debuted with the 40-series, using AI to insert one generated frame between each pair of traditionally rendered frames. Now, Nvidia has not only overhauled the Frame Gen model itself, but the technology can also generate up to three additional frames for every traditionally rendered frame.

Again, this has implications for image quality given we know an AI-generated frame isn't always a perfect match for one that's been cranked out on the CUDA cores, so be sure to check the video for the visual comparison. Anyone who's used frame gen in the past, however, will know what sort of thing to expect – certain UI elements don't always play nice, there is still some fizzling around fast-moving objects, and translucent objects or things intersecting with transparencies can cause visual difficulties.

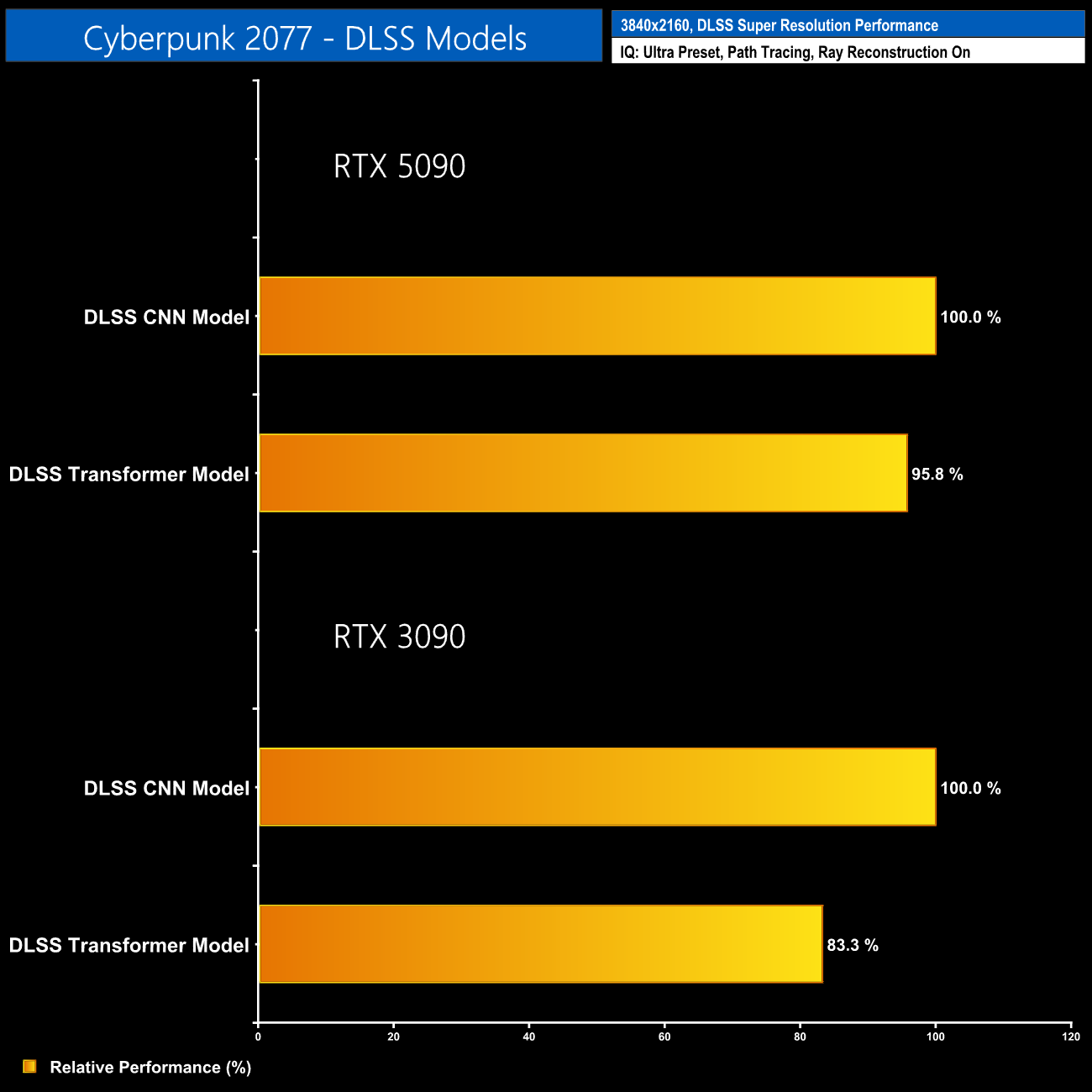

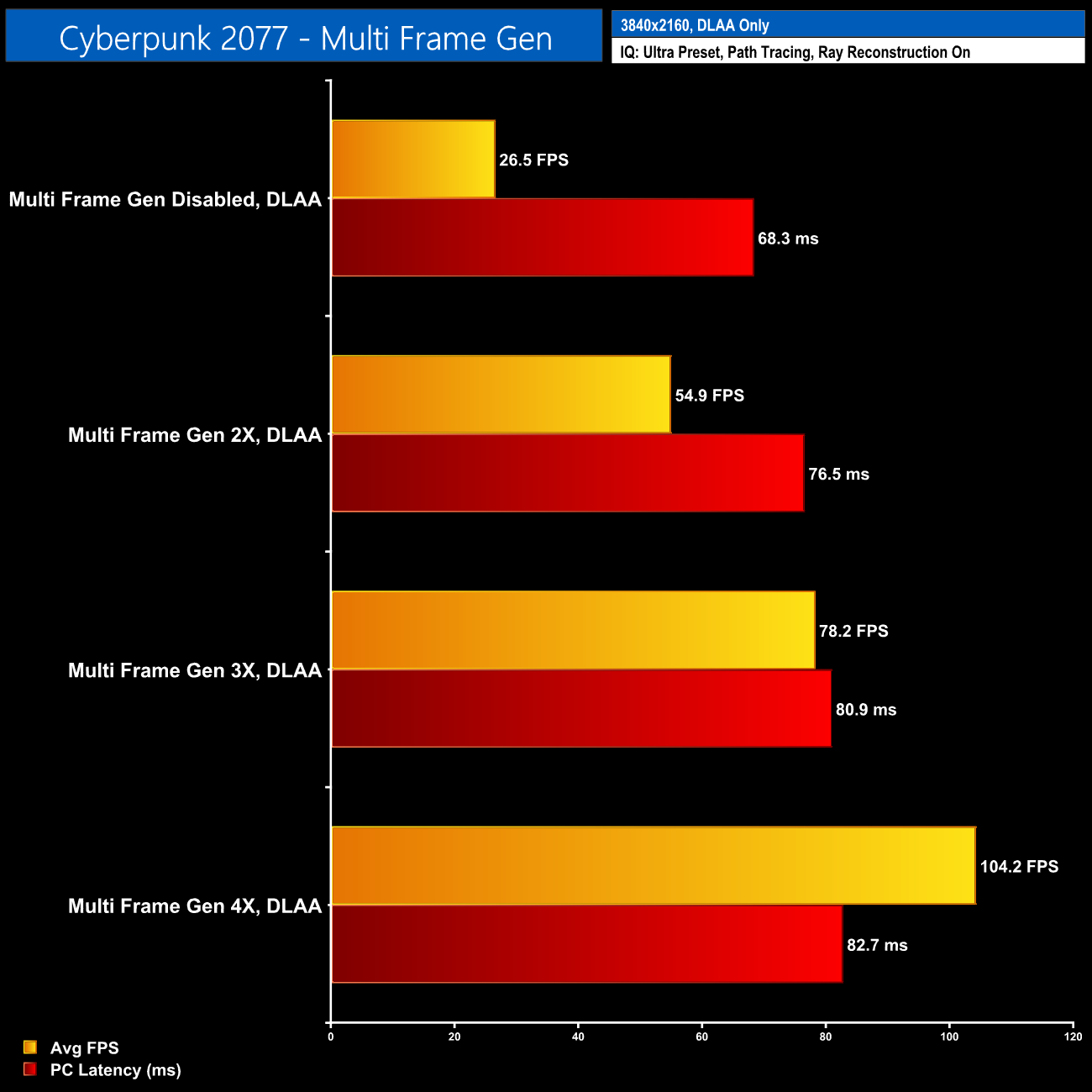

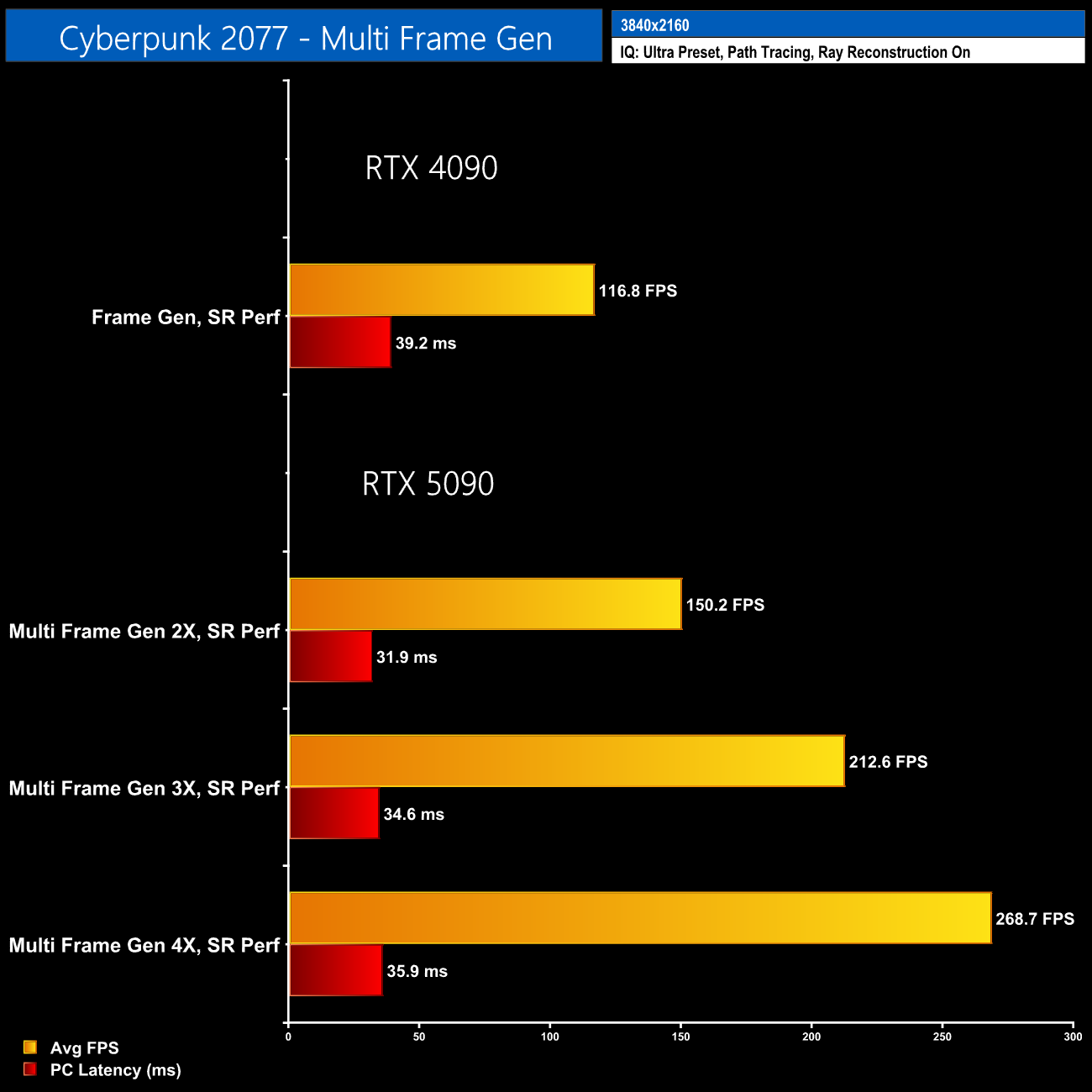

For the most part however, I'd say the increase to visual smoothness is well worth the occasional hiccup, though everyone will have their own preference when it comes to the visual quality. In terms of frame rate numbers, above I tested with MFG disabled using DLAA, MFG disabled using Super Resolution Performance, and then MFG 2X, MFG 3X and MFG 4X, all using Super Resolution Performance.

It's worth stressing that the idea is not to just whack on MFG 4X for the highest frame rate. Rather, the 2X, 3X and 4X settings are designed to give you options to get as close as possible to your monitor's maximum refresh rate. With frame gen and the added latency (which we will get to shortly), there's no longer a benefit to exceeding your monitor's max refresh, so if you have a 144Hz display, it's likely that MFG 2X is all you need. For those using 4K/240Hz screens, MFG 4X could be required to hit that refresh rate target.
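To make that selection logic concrete, here is a rough Python sketch. It assumes, as an idealisation, that the output frame rate is the base frame rate multiplied by the MFG setting; real scaling is lower than that, so the charts remain the better guide. The function name and the 70fps and 60fps base figures are hypothetical, chosen only for illustration.

```python
def pick_mfg_multiplier(base_fps: float, refresh_hz: float) -> int:
    """Pick the largest multiplier (1 = MFG off) that stays within the refresh rate.

    Assumption: output fps = base_fps * multiplier, which is an idealisation.
    """
    best = 1
    for multiplier in (2, 3, 4):
        if base_fps * multiplier <= refresh_hz:
            best = multiplier
    return best


# Hypothetical base frame rates, not measurements from this review.
print(pick_mfg_multiplier(70, 144))  # prints 2
print(pick_mfg_multiplier(60, 240))  # prints 4
```

In other words, the right setting depends on the gap between your pre-frame-gen frame rate and your display's refresh rate, not on which multiplier is the biggest.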

Latency is one key area of discussion, as this is where Frame Gen diverges from what we'd typically expect from a frame rate increase. Despite frame rate going up, latency does not go down. It actually increases, because the algorithm has to buffer one traditionally rendered frame in order to squeeze the AI-generated frames in between.

To show how latency scales in a complete apples-to-apples test, I used DLAA – instead of Super Resolution – first with MFG Off, and then the 2X, 3X and 4X modes. You can see that latency is only going up, despite the frame rate increasing.

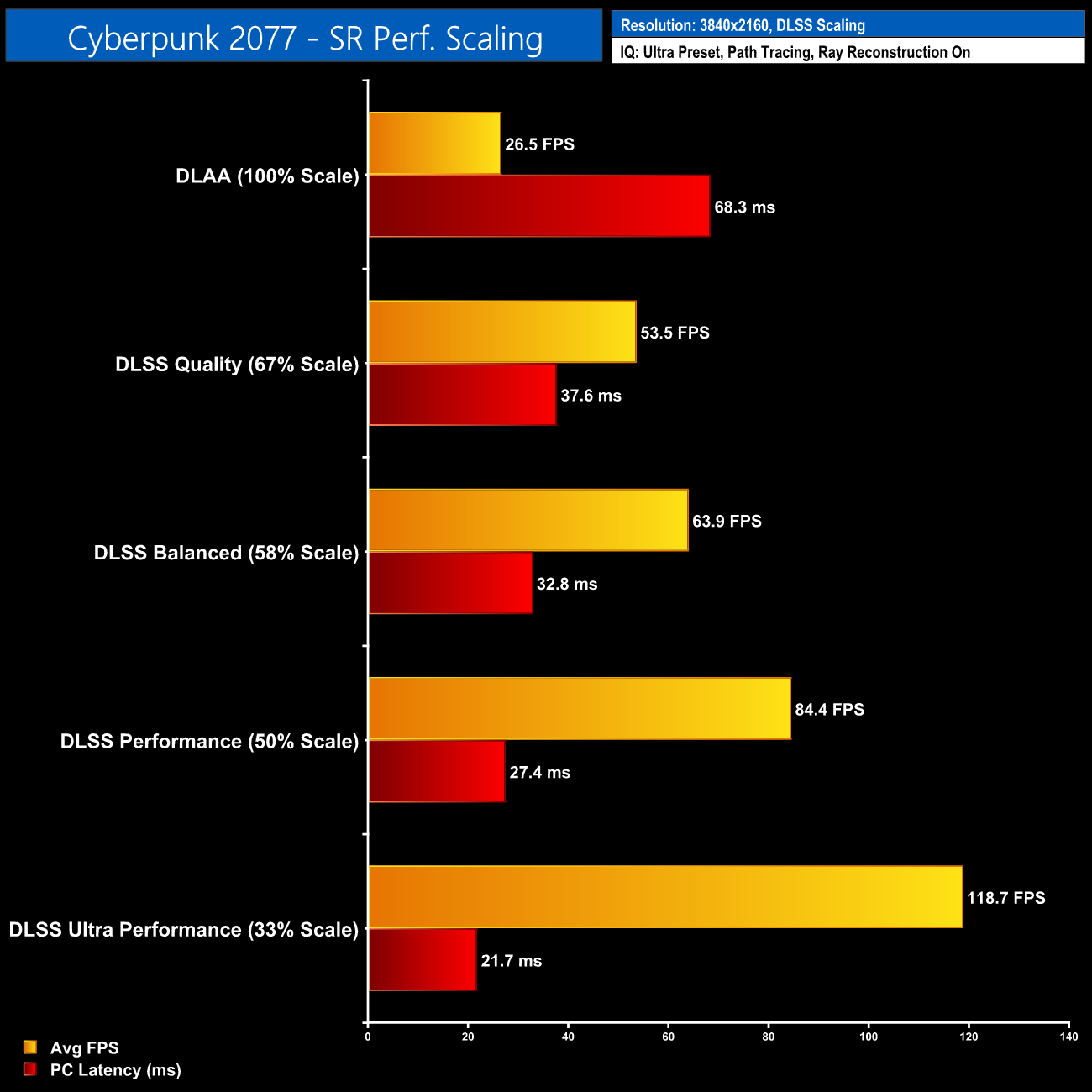

That's a paradigm shift compared to what we've had before, as typically latency goes down as frame rate goes up. We can see a clear example of that when looking at Super Resolution scaling in the chart above – the lower the internal resolution, the higher the frame rate, the lower the latency. That's why Nvidia claims DLSS 4 will improve latency versus native, because Super Resolution can drop it significantly, to the point where even with Frame Gen added in the mix, it's lower than what we saw from a true native render.

In a nutshell, while MFG 4X gives you the visual fluidity of 240fps, it does not improve the latency to match, so you are left with a similar, if not slightly worse, ‘feel’ than if the technology wasn't enabled to begin with.
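A rough way to picture this: with MFG 4X, three of every four displayed frames are generated, so a 240fps output corresponds to roughly 60 traditionally rendered frames per second. The Python sketch below compares the two frame times under that idealised assumption. It is not a model of measured PC latency, and the helper names are made up for illustration.

```python
def frame_time_ms(fps: float) -> float:
    """Time between consecutive frames, in milliseconds."""
    return 1000.0 / fps


def rendered_fps(displayed_fps: float, multiplier: int) -> float:
    """Idealised rendered frame rate: one rendered frame per `multiplier` displayed."""
    return displayed_fps / multiplier


displayed = 240.0
rendered = rendered_fps(displayed, 4)  # 60.0 under the idealised assumption

print(f"Displayed frame time: {frame_time_ms(displayed):.2f}ms")  # 4.17ms
print(f"Rendered frame time:  {frame_time_ms(rendered):.2f}ms")   # 16.67ms
```

The screen updates every 4ms or so, but new rendered frames only arrive about every 17ms, which is why the game does not feel like a true 240fps experience.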

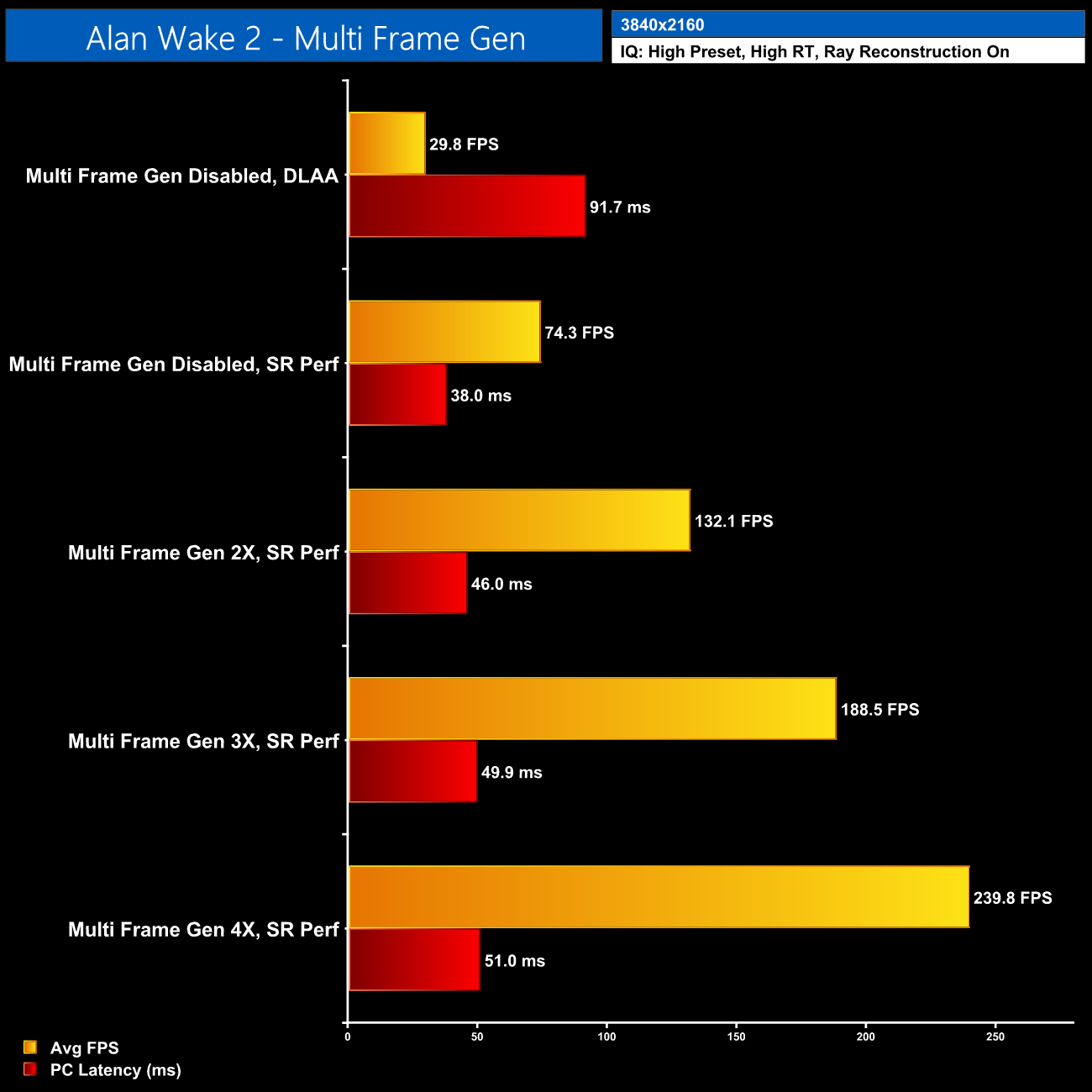

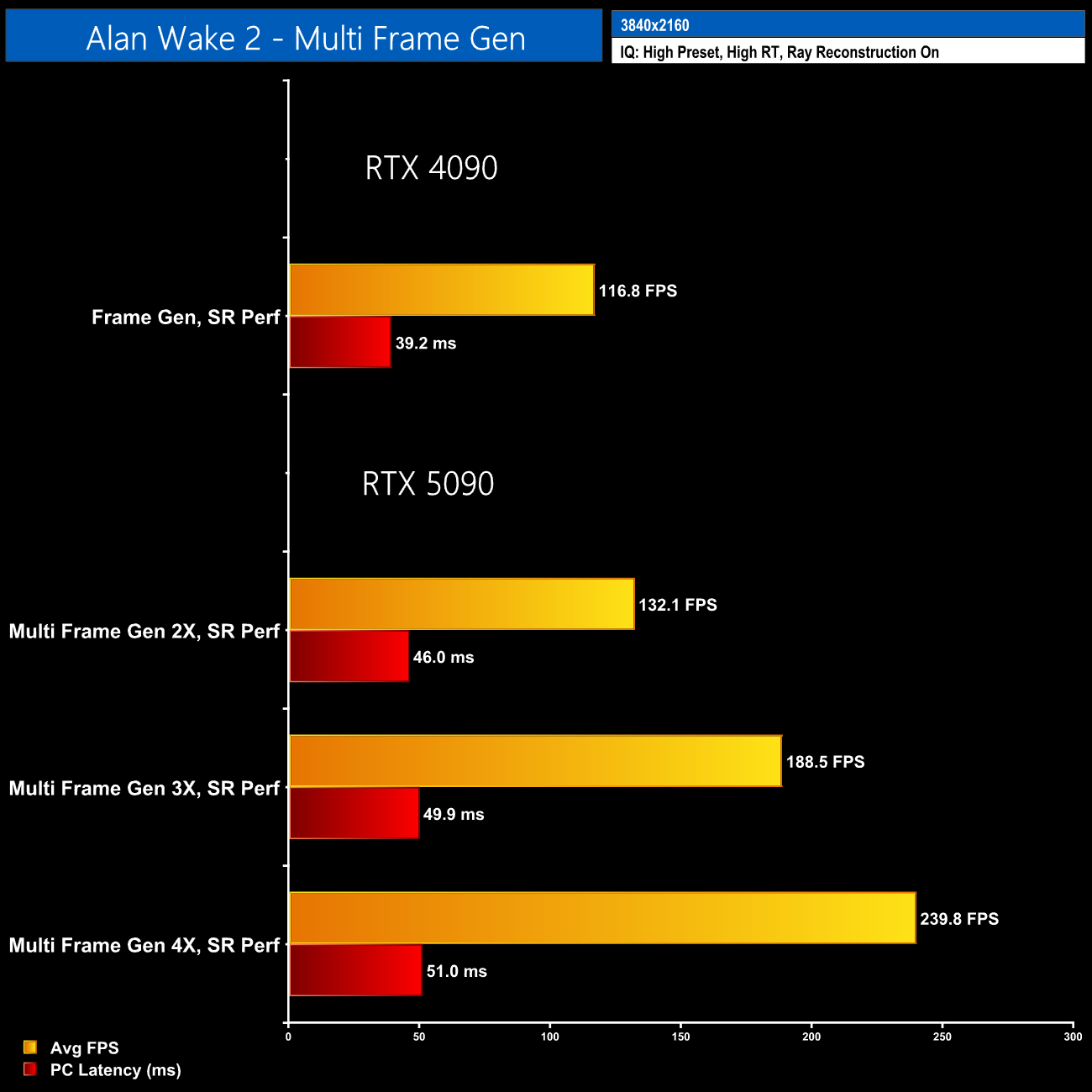

Just to show another example of MFG in action, I also tried out Alan Wake 2. Now, as mentioned earlier in the review, I have been having frame time issues with the 5090, but it still shows the raw average fps increases that can be had with DLSS. Once more, though, latency is increased with the technology enabled – we see 51ms of PC latency when using MFG 4X alongside Super Resolution Performance mode, compared to 38ms when just using Super Resolution Performance. Again, that means there is a disconnect between the visual fluidity and the input – how sensitive you are to this can vary from person to person.

I do think it's easier to get away with the added latency in slower, third-person games like Alan Wake 2. In first-person games, there feels like less of a disconnect between you and the character's movements, so any extra latency is more easily felt. Third-person games seem to give you more of a ‘buffer zone’ when it comes to the feel of the game, though maybe that's just me!

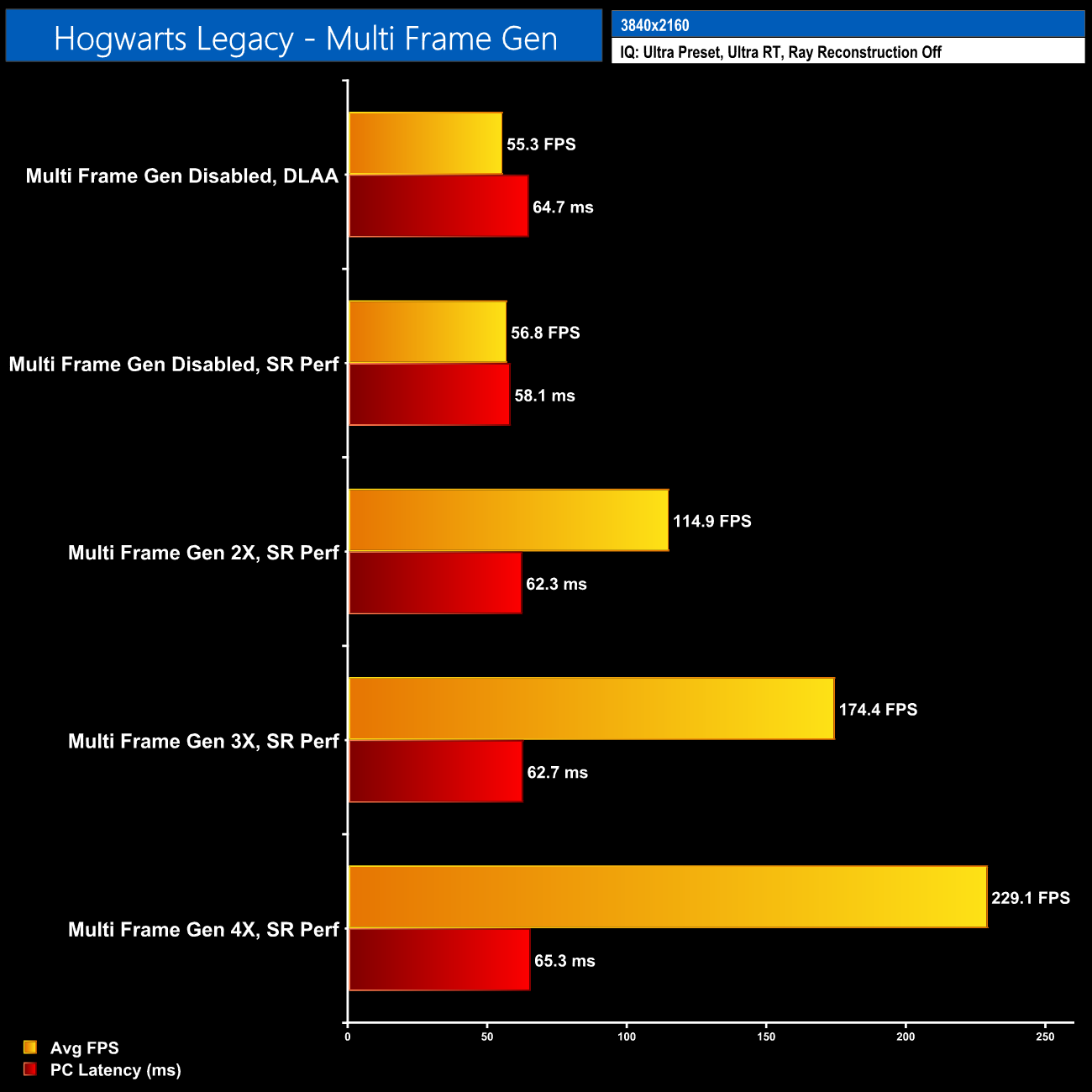

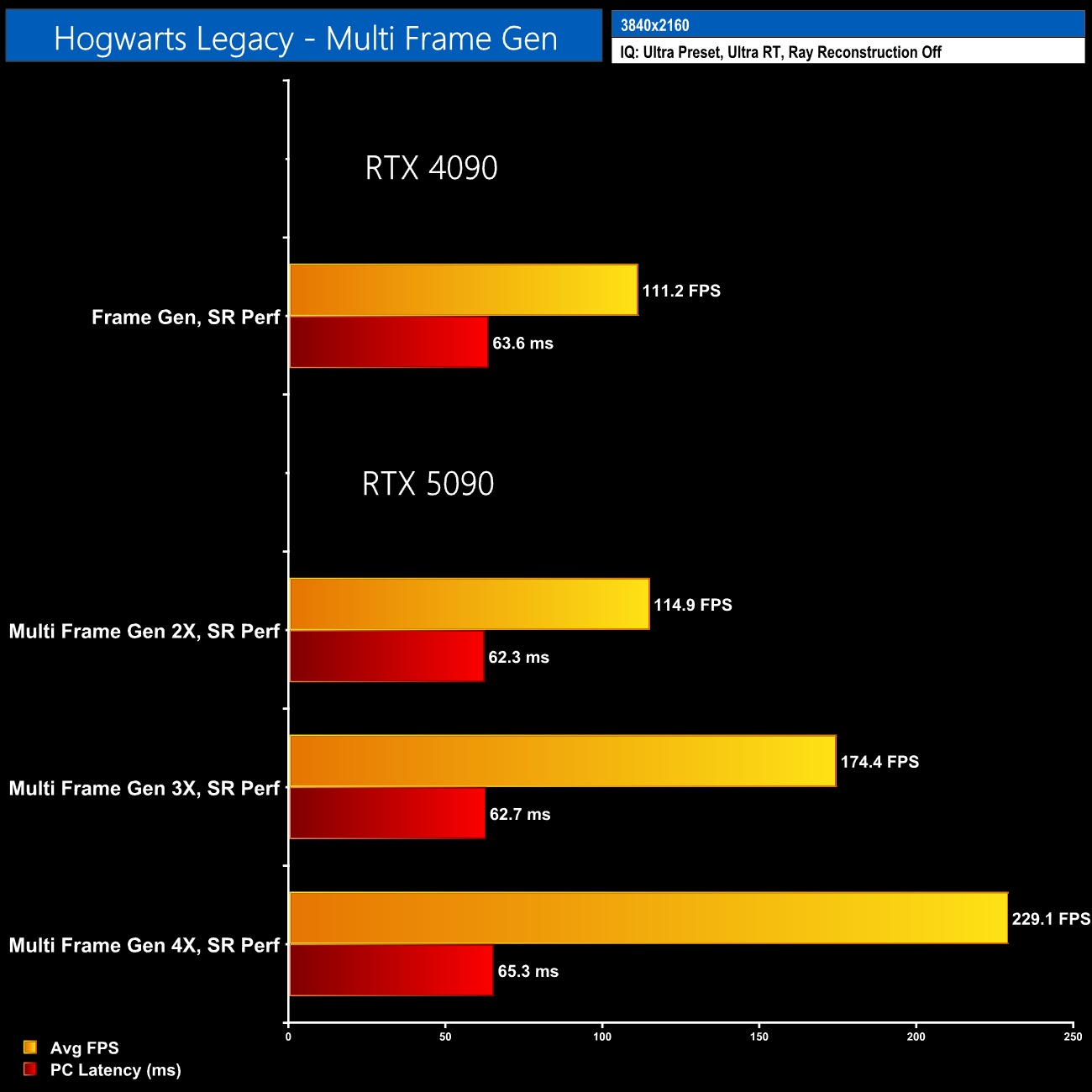

Hogwarts Legacy is a slightly different example, and it's very interesting because it gives us an insight into what MFG can do in CPU-bound games. As we can see in the above chart, there is no performance gain to be had when moving from DLAA to DLSS Super Resolution Performance on the RTX 5090, simply because we are so CPU bottlenecked, even at 4K. It's only when enabling MFG that frame rates increase, first to 115fps with MFG 2X and then up to 229fps with MFG 4X. And as the 5090 is so CPU bottlenecked, latency increases by much smaller amounts, though it's still going up rather than down as we'd typically expect of a frame rate increase.
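One way to read those two results is to divide each by its MFG multiplier to estimate the underlying rendered frame rate. This Python sketch does that using only the 115fps and 229fps averages quoted above. It assumes the generated-to-rendered ratio is exact, which is a simplification.

```python
# MFG multiplier -> average displayed fps quoted above for Hogwarts Legacy.
measured_fps = {2: 115, 4: 229}

# Assumption: displayed fps = rendered fps * multiplier.
implied_rendered = {m: fps / m for m, fps in measured_fps.items()}

for multiplier, rendered in implied_rendered.items():
    print(f"MFG {multiplier}X: ~{rendered:.2f}fps rendered")
# MFG 2X: ~57.50fps rendered
# MFG 4X: ~57.25fps rendered
```

Both settings work out to roughly 57 rendered frames per second, which would be consistent with the CPU setting the ceiling while MFG multiplies what is displayed.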

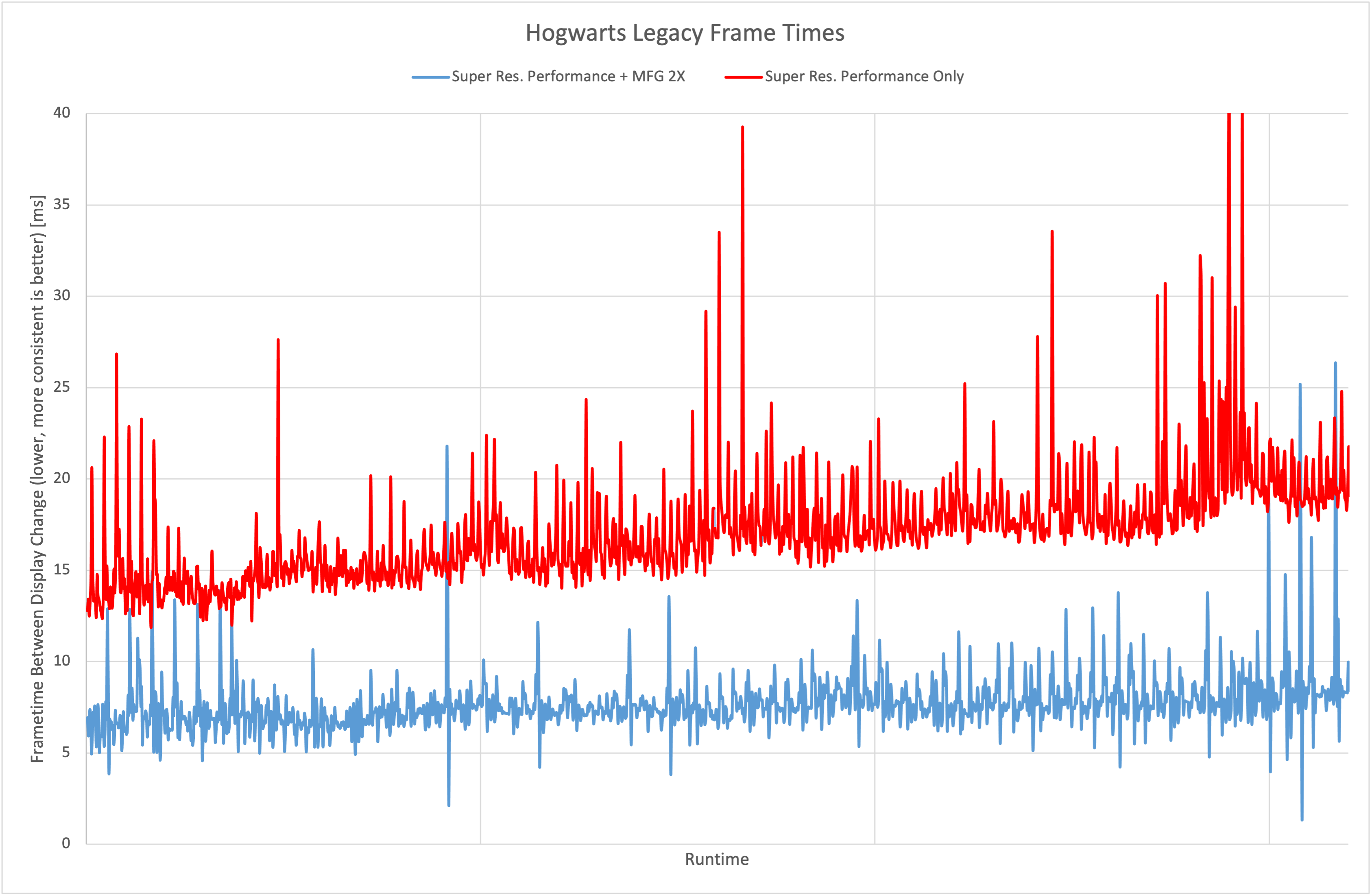

The snag is that the frame pacing in Hogwarts Legacy has always been terrible, and MFG cannot fix that. Here, for instance, we're comparing Super Resolution Performance mode without MFG to the same upscaling with MFG 2X enabled. Yes, the overall frame times are lower, as the frame rate has increased, but the consistency is still very poor and there are a number of large spikes. To be clear, that's not caused by MFG, that's just the game. Hopefully it'll get fixed one day.

Performance versus RTX 4090

As a final example, I've included some comparisons to the RTX 4090, which only supports ‘Frame Generation’ rather than ‘Multi Frame Generation’, i.e. the insertion of one AI-generated frame rather than up to three on the RTX 5090. That means you can get significantly higher frame rates with the 5090, which is how Nvidia devised its ‘up to 2x faster’ marketing slides that generated all that discussion earlier in the month. I'm presenting these charts without further comment. If the idea of generating more frames is valuable to you, then great, the 5090 can often double the frame rate of a 4090 with MFG 4X. If not, we can move on!

KitGuru KitGuru.net – Tech News | Hardware News | Hardware Reviews | IOS | Mobile | Gaming | Graphics Cards
